What model are you using from Anthropic? I can check.

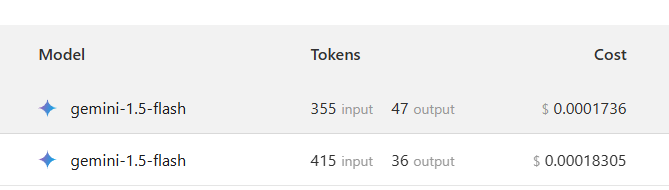

models

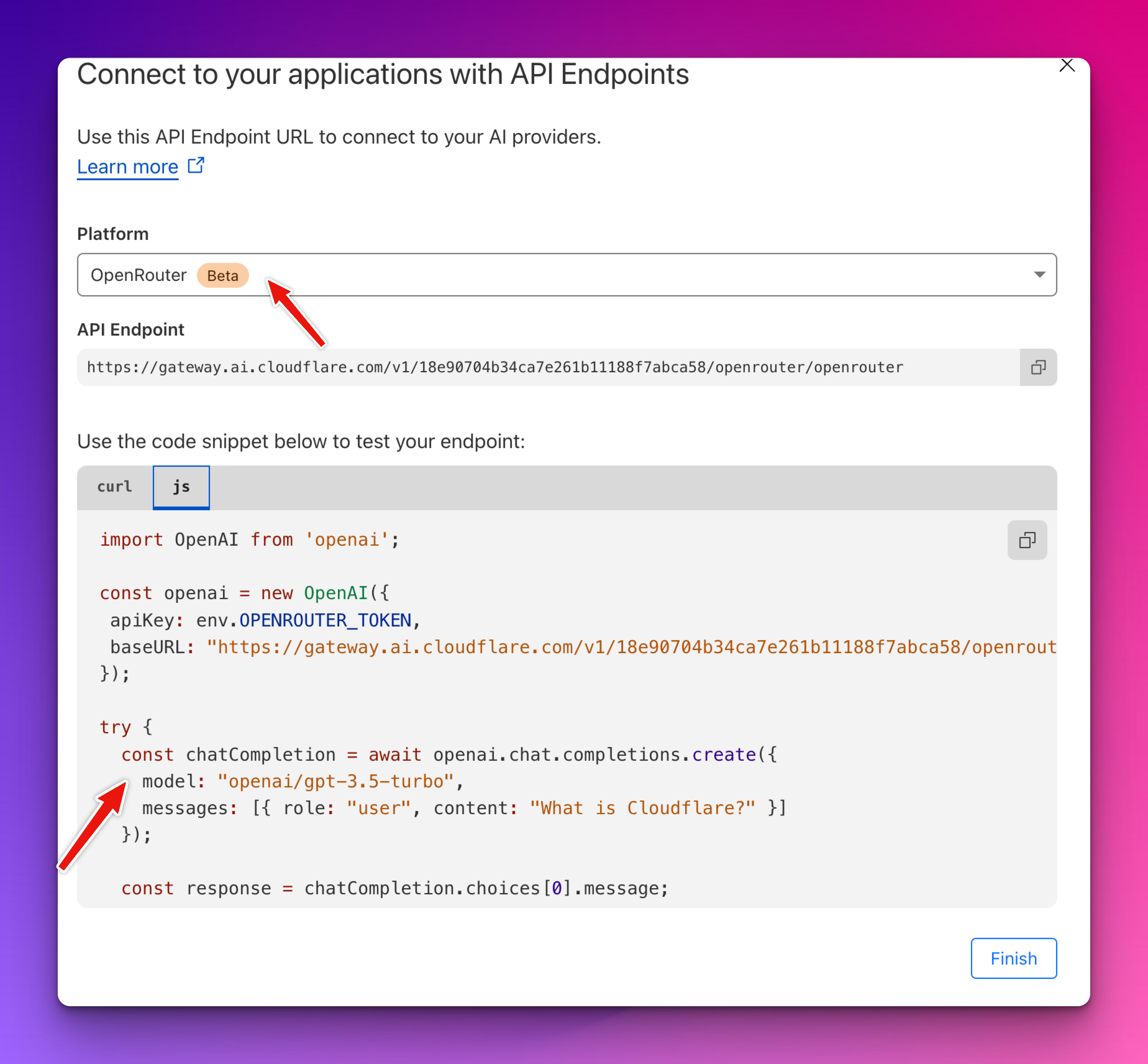

openai

X • 1/30/25, 6:36 PM

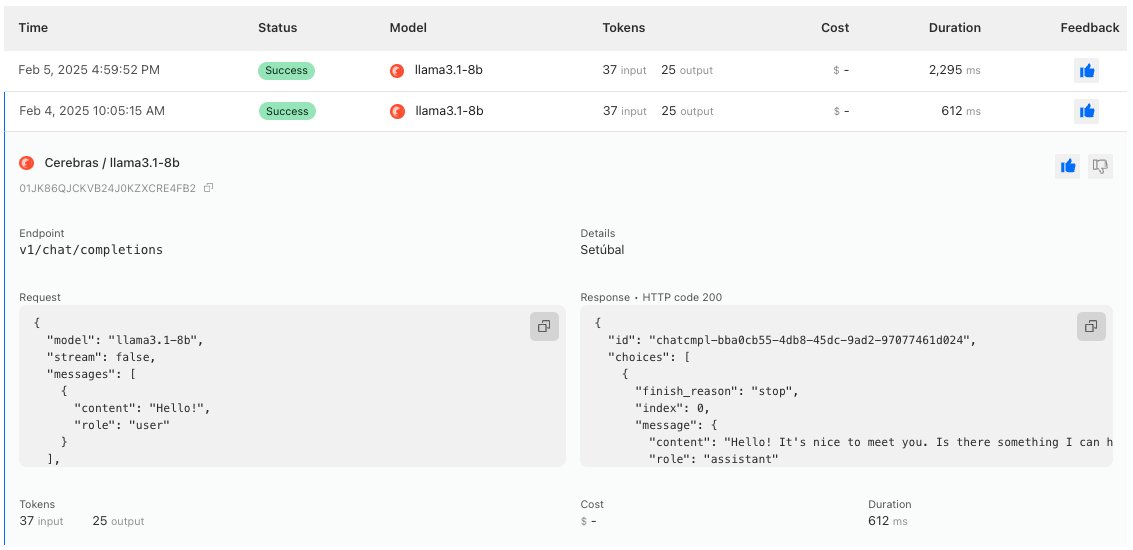

We've got 2 new exciting updates for AI Gateway!

X • 2/6/25, 10:55 PM

```typescript
// See "Model Routing" section: openrouter.ai/docs/model-routing
models?: string[];
```
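To show how the `models?: string[]` field might be used, here is a minimal sketch. It assumes, based on OpenRouter's model-routing docs, that `models` is an ordered list of candidates, where later entries are fallbacks for earlier ones. Only the `models` field comes from the snippet. The `ChatRequest`/`ChatMessage` shapes, `buildRequest`, `firstAvailable`, and the `example/...` model IDs are hypothetical placeholders. `firstAvailable` only mimics the fallback idea locally and is not OpenRouter's server-side logic.

```typescript
// Hypothetical request shape: only `models?: string[]` is taken from the snippet;
// the other fields are illustrative assumptions.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface ChatRequest {
  model?: string;    // assumed single-model field (hypothetical here)
  models?: string[]; // ordered candidate models, as in the snippet
  messages: ChatMessage[];
}

// Hypothetical helper: build a request body listing candidates in priority order.
function buildRequest(prompt: string, models: string[]): ChatRequest {
  return {
    models: [...models], // copy so callers can't mutate the request later
    messages: [{ role: "user", content: prompt }],
  };
}

// Local illustration of ordered fallback: return the first candidate the
// predicate reports as available, or undefined if none is.
function firstAvailable(
  models: readonly string[],
  isAvailable: (model: string) => boolean,
): string | undefined {
  return models.find(isAvailable);
}

// Placeholder model IDs; check the provider's model list for real ones.
const req = buildRequest("Hello", ["example/primary-model", "example/fallback-model"]);
const down = new Set(["example/primary-model"]); // pretend the primary is unavailable
const chosen = firstAvailable(req.models ?? [], (m) => !down.has(m));

console.log(JSON.stringify(req));
console.log(chosen); // example/fallback-model
```

Keeping the list ordered lets the caller express preference once in the request, rather than re-sending a new request per model after each failure.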