has anyone used for pushing data onto a kafka queue?

`max: 5` instead of `max: 1` for connections? https://developers.cloudflare.com/hyperdrive/configuration/connect-to-postgres/#postgresjs

`max: 1` is probably better as it's fewer moving parts to debug if you do run into issues.

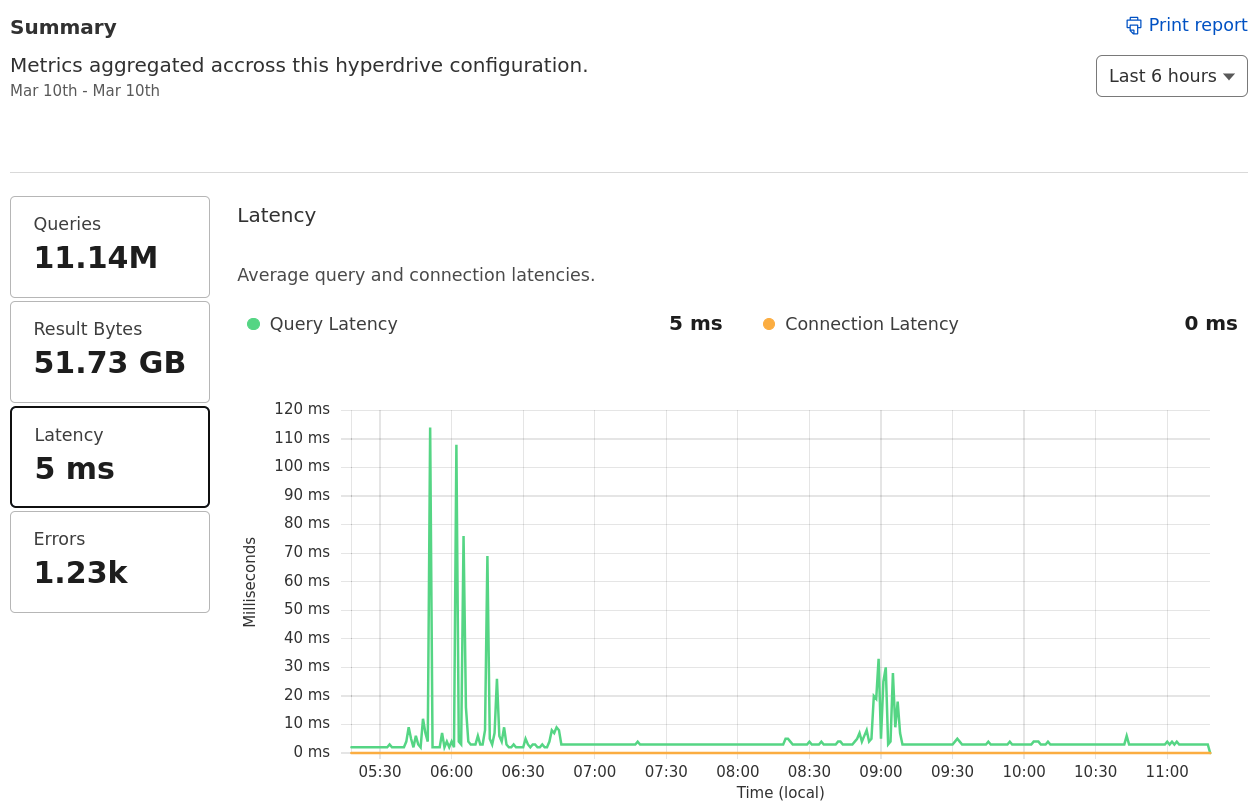

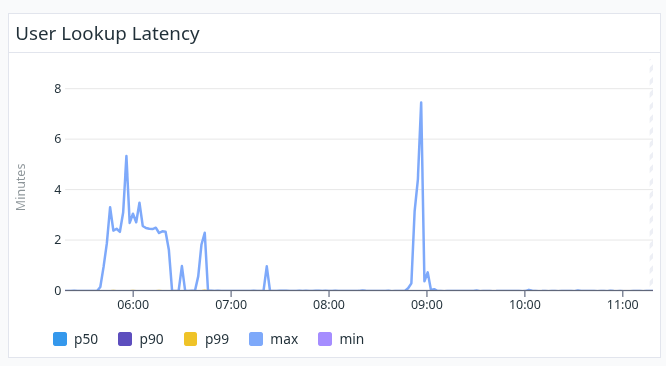

`{ connect_timeout: 1, max: 5 }` and also have some `setTimeout(` helpers wrapping the whole function issuing the query -- but still see some successful queries record latencies of multiple minutes sometimes

`statement_timeout`. Cut out all the interactions across the stack and just have queries get kiboshed if they run too long.

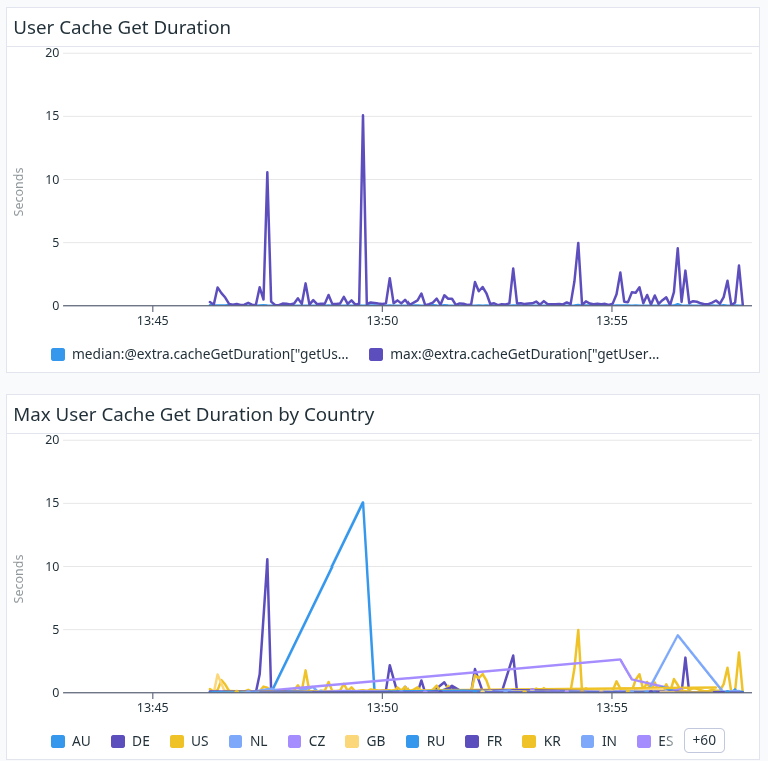

`setTimeout(` wrapper

`cache.get(` call that was taking a butt load of time -- so just gotta make sure we wrap all those with timeouts
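For anyone wanting to wrap calls like that, here is a minimal sketch of a `setTimeout(`-based wrapper. `withTimeout` and `TimeoutError` are made-up names for illustration, not part of postgres.js or Hyperdrive, and this is only one way to do it.

```typescript
// Hypothetical helper: rejects if `work` has not settled within `ms` milliseconds.
class TimeoutError extends Error {
  constructor(ms: number) {
    super(`operation timed out after ${ms} ms`);
    this.name = "TimeoutError";
  }
}

async function withTimeout<T>(work: Promise<T>, ms: number): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;

  // This promise never resolves; it only rejects once the deadline passes.
  const deadline = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new TimeoutError(ms)), ms);
  });

  try {
    // Whichever settles first wins: the real work or the deadline.
    return await Promise.race([work, deadline]);
  } finally {
    // Always clear the timer so it does not keep running after we return.
    clearTimeout(timer);
  }
}
```

Usage would look something like `await withTimeout(cache.get(key), 2000)`, where `key` and the 2000 ms budget are placeholders. Note that this only stops the caller from waiting; it does not cancel the underlying call, so a slow query can keep running on the database side, which is why `statement_timeout` was suggested as the thing that actually kills long queries.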

`Error: write CONNECTION_CLOSED [some value].hyperdrive.local:5432` on 100% of my requests to a Hyperdrive private database. I think it might be coming from a database error because I've just modified the schemas, but don't see anywhere to view a more specific error message. Is there somewhere where that will be logged?

`cloudflared tunnel diag` and that resulted in errors

`sql` template or `.unsafe()` for postgres.js). It then works for most queries, but on repeated ones it'll often throw a connection error. The best way to get it to work reliably is to throw an error after your query (like by running ()), and Clickhouse Postgres resets the entire connection on all errors, so you'll get a new conn on the next attempt, rip connection pooling though. It works fine for my limited use of small data querying though, and connecting securely through a tunnel.

`fetch_types` but no dice

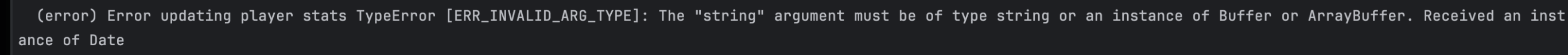

`jsonb` in some cases