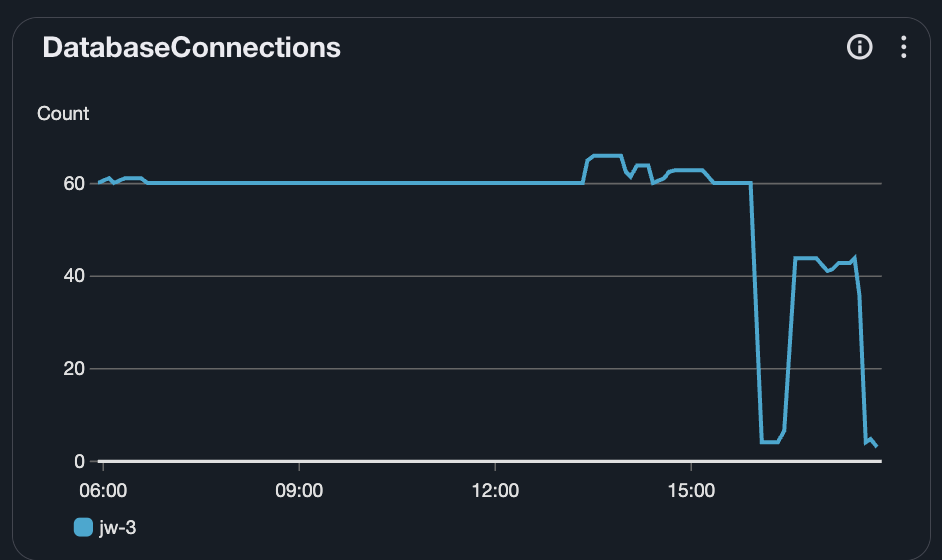

Oh, I think I have run into this, actually. Does this translate to about 60 concurrent Workers, then? Or more?

{ connect_timeout: 1, max: 5 }

setTimeout(

statement_timeout

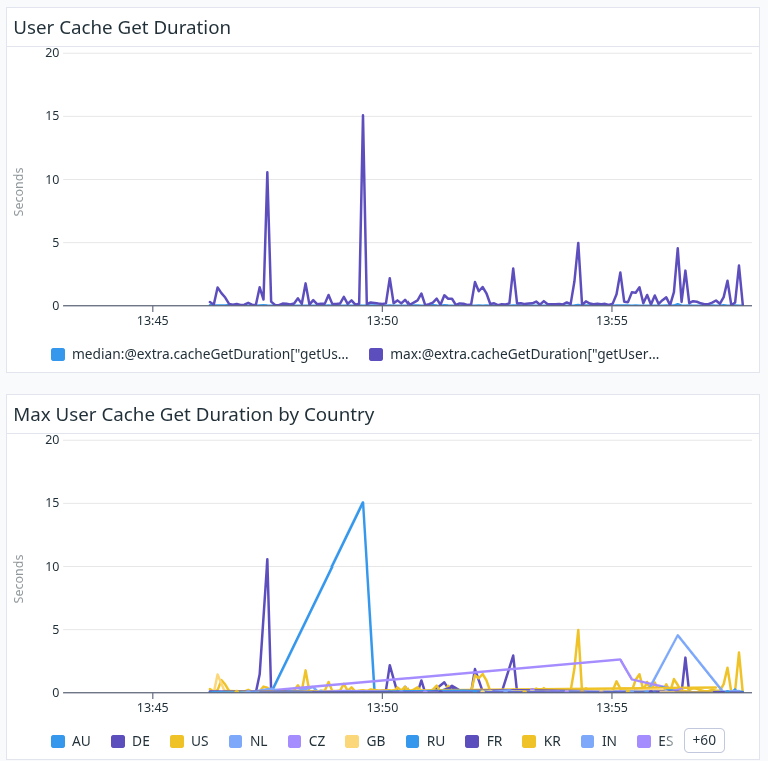

setTimeout(

cache.get(
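For anyone reading along: one way to put a client-side time limit on a call like `cache.get(` using `setTimeout(` is to race the call against a timer. This is only a minimal sketch of that general pattern, not necessarily what was used in this thread; the helper name `withTimeout` is hypothetical.

```typescript
// Hypothetical helper (not from the thread): rejects if `work` has not
// settled within `ms` milliseconds, otherwise passes its result through.
async function withTimeout<T>(work: Promise<T>, ms: number): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;

  // A promise that never resolves and rejects once the timer fires.
  const timeout = new Promise<never>((_resolve, reject) => {
    timer = setTimeout(() => {
      reject(new Error(`operation timed out after ${ms} ms`));
    }, ms);
  });

  try {
    // Whichever promise settles first decides the outcome.
    return await Promise.race([work, timeout]);
  } finally {
    // Always clear the timer so it does not linger after `work` settles.
    if (timer !== undefined) clearTimeout(timer);
  }
}
```

Usage would look something like `await withTimeout(cache.get(key), 50)`, where `cache` and `key` are placeholders. Note that the race only stops waiting; it does not cancel the underlying work. For database queries, the Postgres `statement_timeout` setting is what aborts a long-running statement on the server side.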

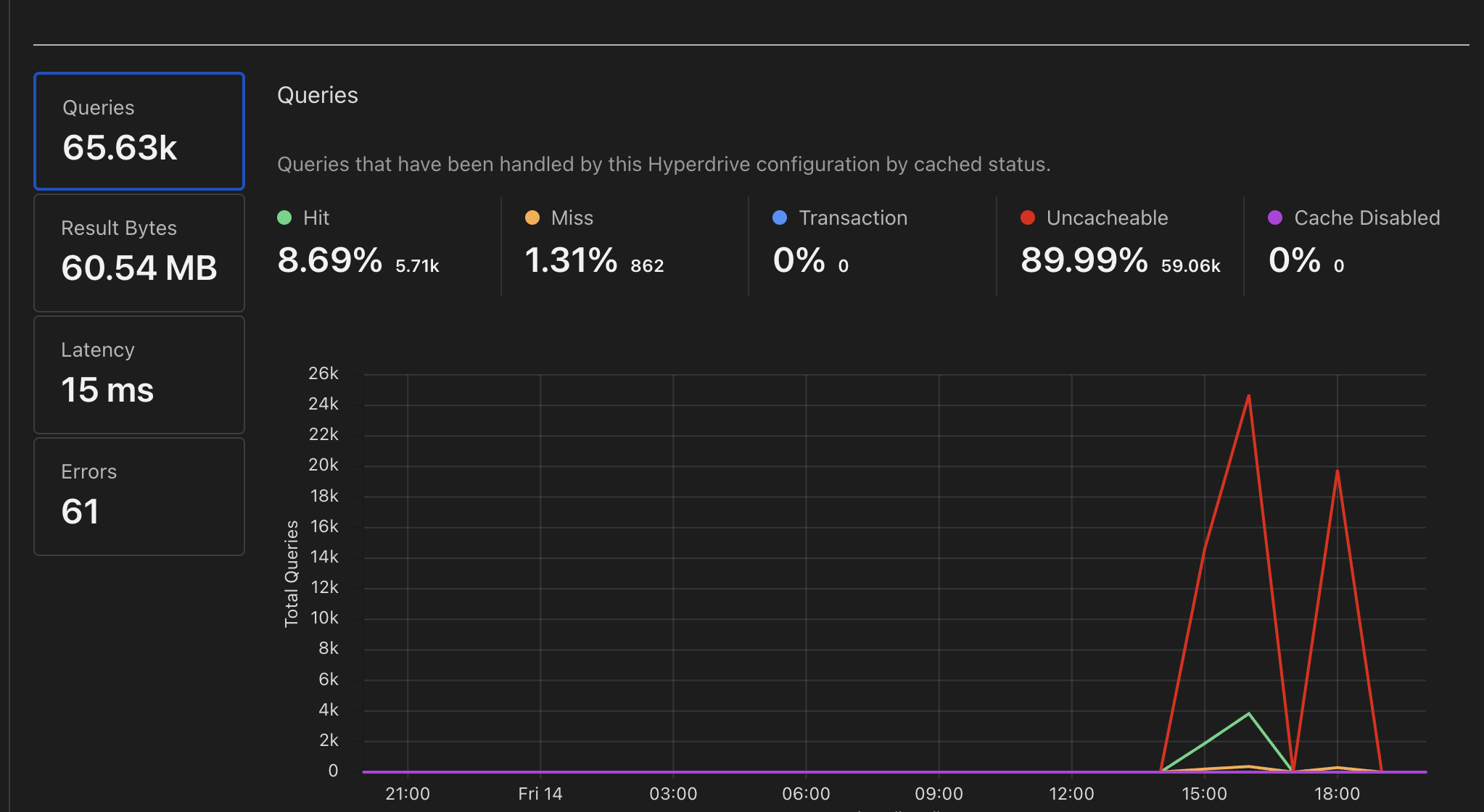

Error: write CONNECTION_CLOSED [some value].hyperdrive.local:5432

cloudflared tunnel diag

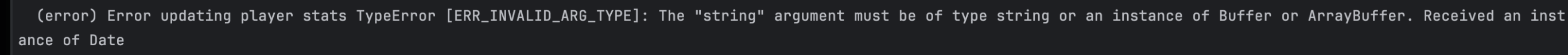

sql()

fetch_types

jsonb

// Neon serverless driver
export function createDbConnection(databaseUrl: string) {
  const sql = neon(databaseUrl);
  return drizzle(sql, { schema });
}

// postgres.js client, given the Hyperdrive connection string
export function createDbConnection(hyperDrivedatabaseUrl: string) {
  const client = postgres(hyperDrivedatabaseUrl, {
    max: 1,
    fetch_types: false,
  });
  return drizzle(client, { schema });
}