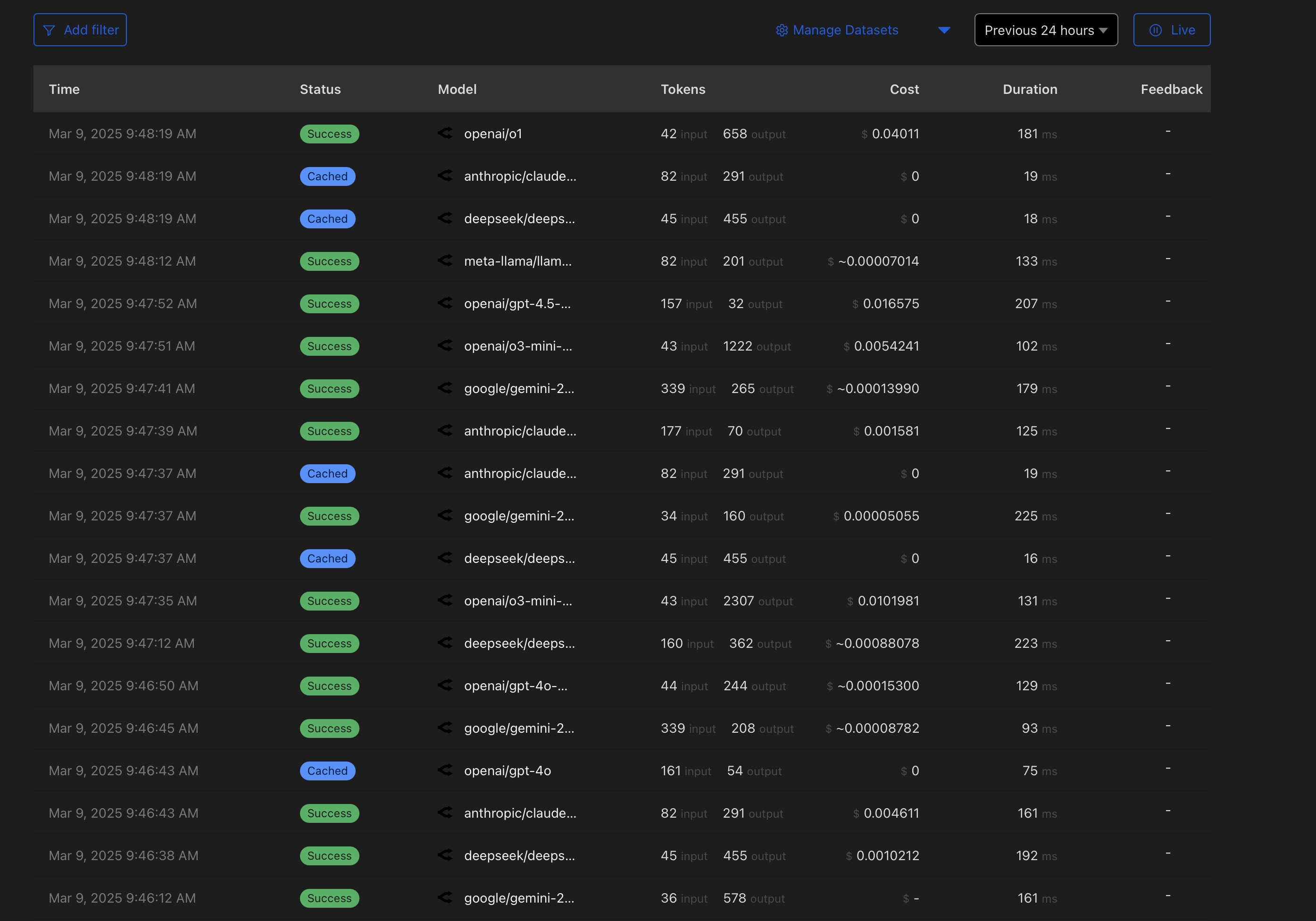

When using OpenRouter, the reported duration values are far lower than reality. Many of these requests take multiple seconds (or even tens of seconds), and even the fastest ones come back in the mid-to-high hundreds of milliseconds, yet AI Gateway isn't reporting anything over 225ms.
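For anyone wanting to compare against the dashboard numbers, here is a minimal sketch of capturing the client-side wall-clock duration of a single request. `timed_call` and `fake_request` are hypothetical names used only for illustration, and `fake_request` merely sleeps in place of a real call routed through the gateway.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def timed_call(call: Callable[[], T]) -> tuple[T, float]:
    """Run `call` and return (result, elapsed wall-clock milliseconds)."""
    start = time.perf_counter()
    result = call()
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return result, elapsed_ms


def fake_request() -> str:
    # Hypothetical stand-in: replace with the real request to be measured.
    time.sleep(0.05)
    return "ok"


result, elapsed_ms = timed_call(fake_request)
print(f"client-side duration: {elapsed_ms:.0f} ms")
```

The client-side figure includes network time to and from the gateway, so it should come out somewhat higher than the reported duration, but not by an order of magnitude.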

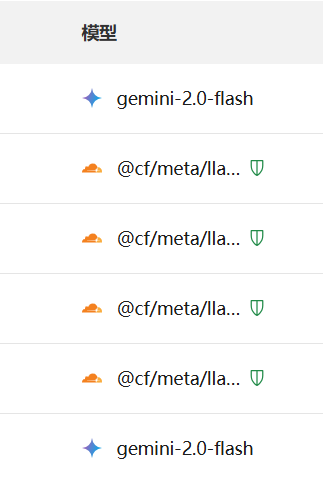

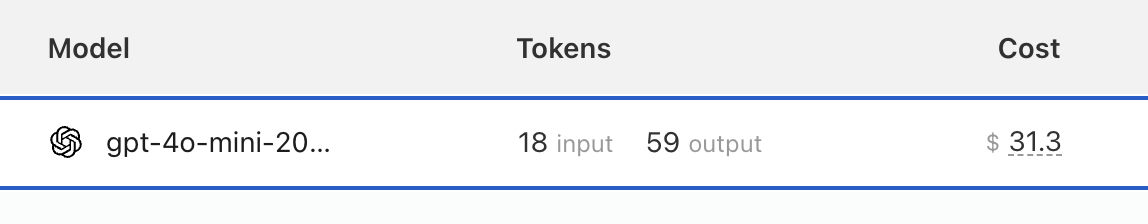

The other [minor but annoying] issue with AI Gateway + OpenRouter is that the model names are cut off very short, and given the

{model}:{keyword}