ComfyUI serverless

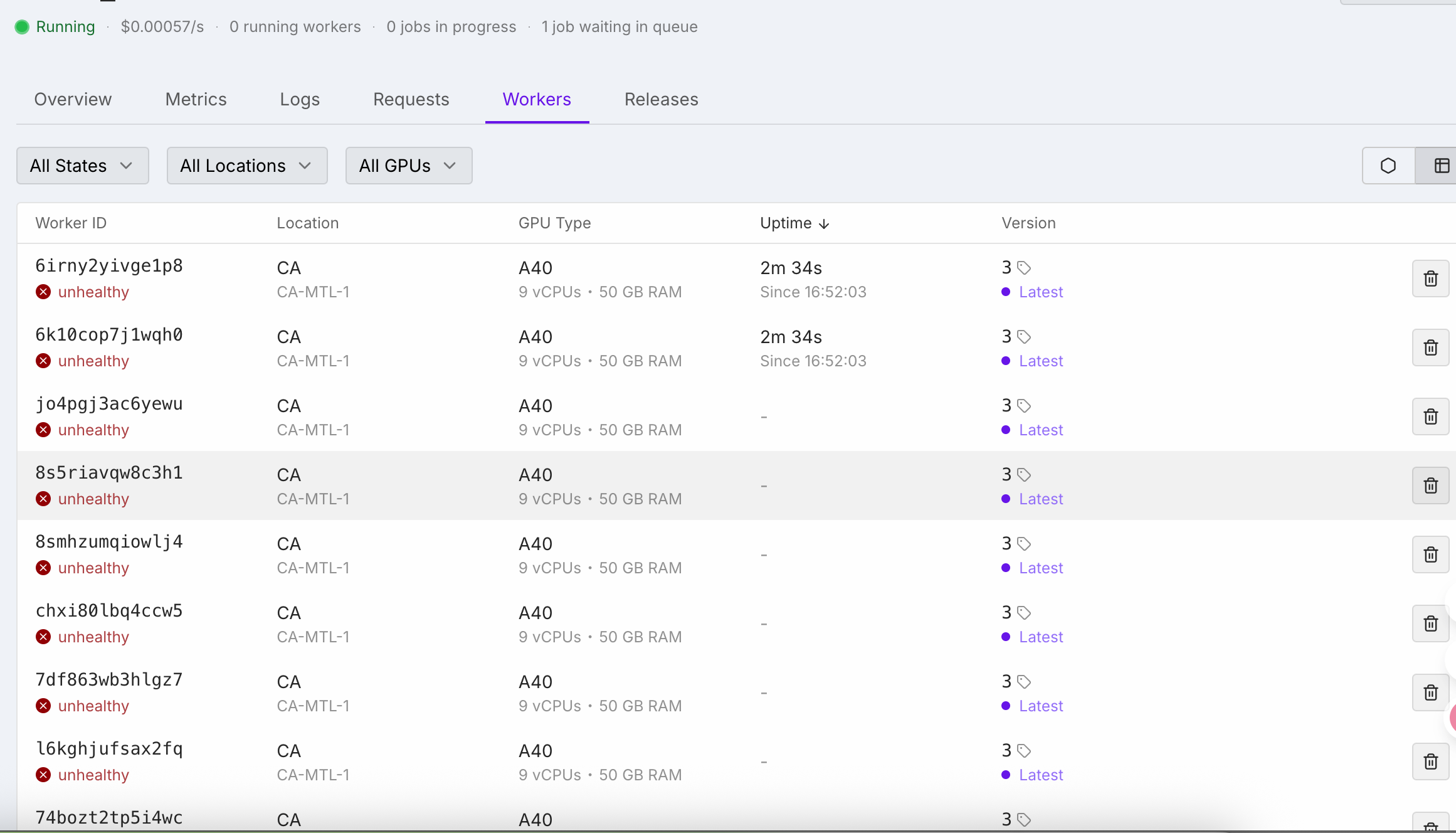

Random EGL initialization errors

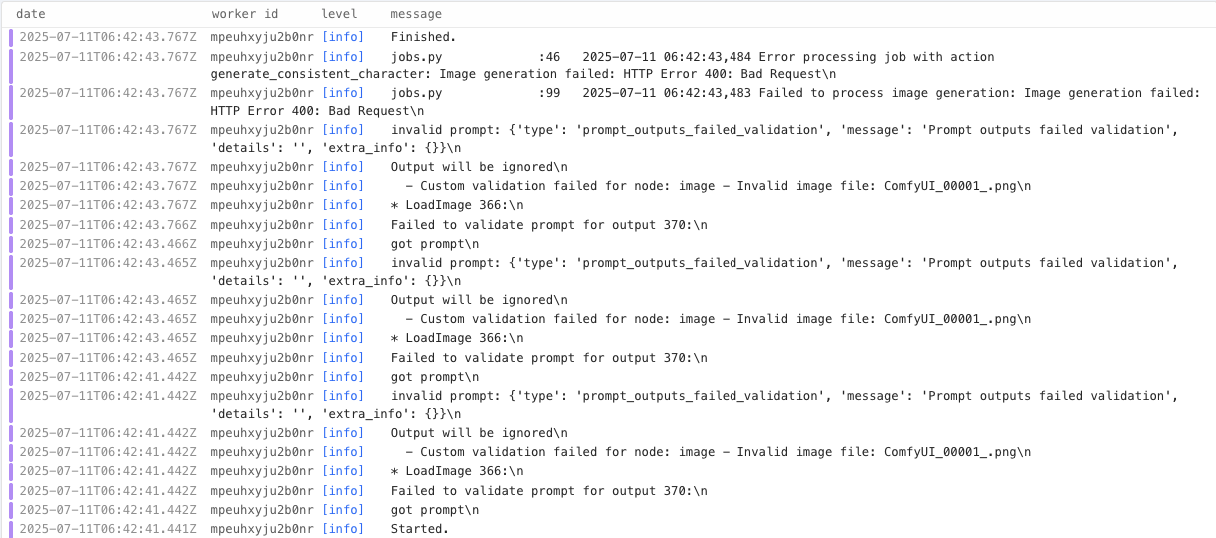

prompt_outputs_failed_validation - Serverless workflow broke suddenly without code changes

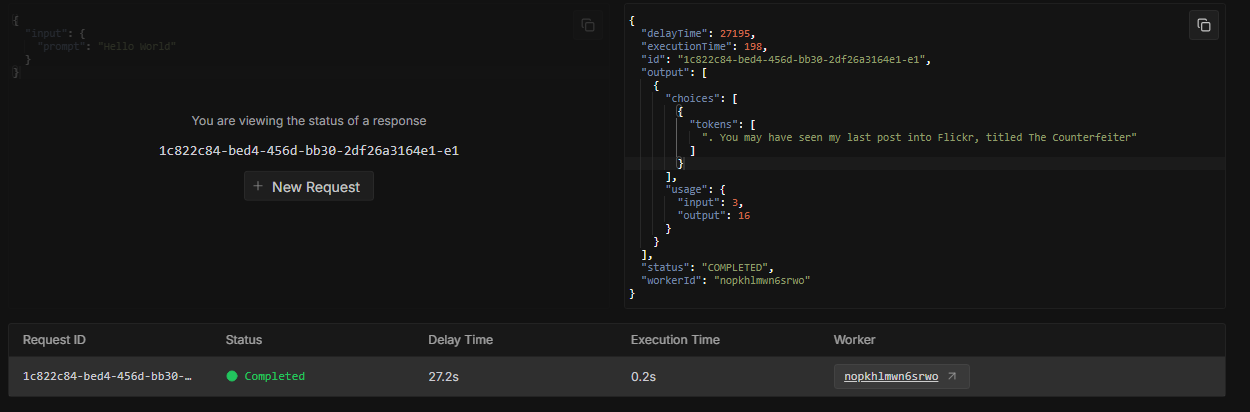

Serverless Instance Queue Stalling

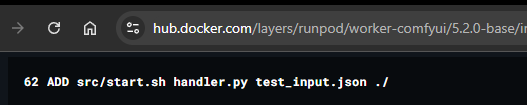

Why is test_input.json a must?

How do I get the ComfyUI frontend to work with serverless?

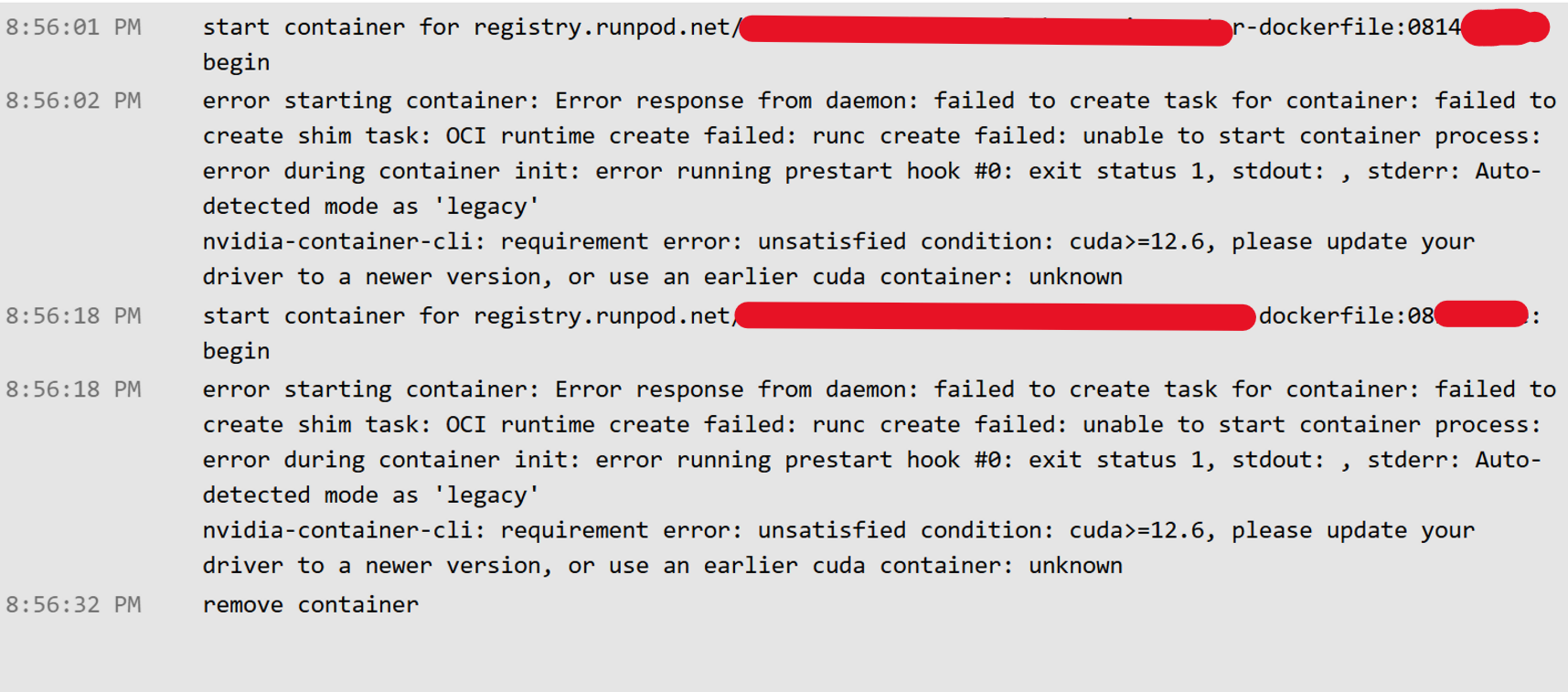

Containers silently charge users while stuck in infinite CUDA compatibility failure loop

Is there a way to lock serverless down to a specific IP range/CIDR?

Serverless GitHub with Hugging Face token

Serverless Worker Fails: “cuda>=12.6” Requirement Error on RTX 4090 (with Network Storage)

Stuck at "loading container image from cache" in a loop for 3 hours

Low H200 availability in all network storage regions

Get TorchCompile working in serverless with Flux/ComfyUI

How do I bust the cache on Serverless builds?

Runpod S3 multipart upload error

Serverless payment

Network volume attached, but not really?

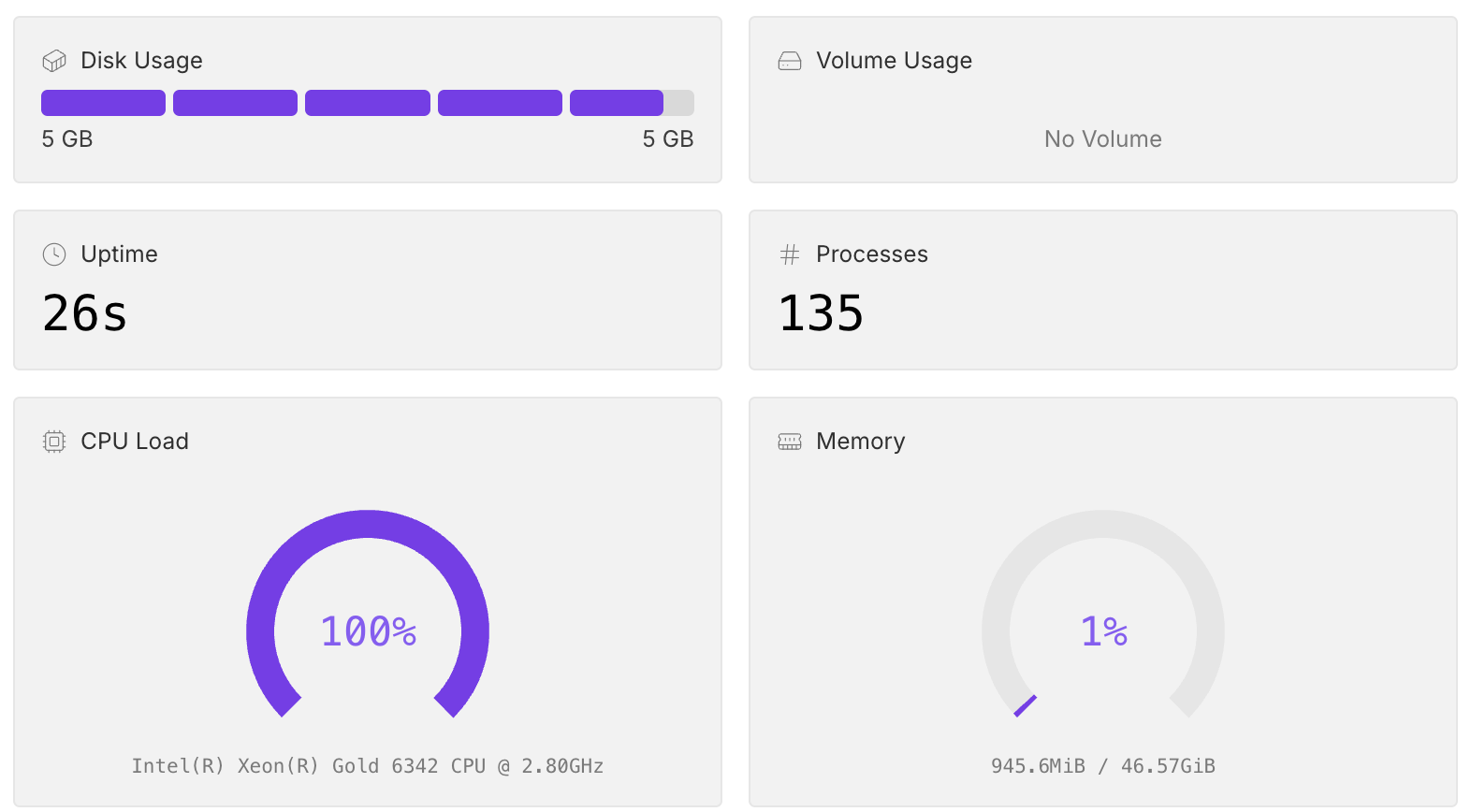

```
/usr/local/lib/python3.12/dist-packages/huggingface_hub/file_download.py:799: UserWarning: Not enough free disk space to download the file. The expected file size is: 3077.77 MB. The target location /workspace/.hf-cache/hub/models--Menlo--Jan-nano-128k/blobs only has 273.49 MB free disk space.
```
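The warning says the download needs 3077.77 MB but the target directory only has 273.49 MB free, so it falls roughly 2804.28 MB short. Below is a minimal sketch of a pre-download check using Python's standard `shutil.disk_usage`, so a worker can fail fast instead of starting a download that cannot finish. The function names (`free_bytes`, `shortfall_mb`, `ensure_room`) are hypothetical, and the sketch assumes the warning's "MB" means 10^6 bytes.

```python
import shutil
from pathlib import Path


def free_bytes(target: str) -> int:
    """Free bytes on the filesystem holding `target` (or its nearest existing parent)."""
    p = Path(target)
    # The cache directory may not exist yet, so walk up until a path does.
    while not p.exists():
        p = p.parent
    return shutil.disk_usage(p).free


def shortfall_mb(expected_bytes: int, available_bytes: int) -> float:
    """Missing space in MB (assumed to be 10**6 bytes); 0.0 if the file fits."""
    return max(0.0, (expected_bytes - available_bytes) / 1e6)


def ensure_room(target: str, expected_bytes: int) -> None:
    """Hypothetical helper: raise before downloading if `target` lacks space."""
    missing = shortfall_mb(expected_bytes, free_bytes(target))
    if missing > 0:
        raise OSError(
            f"{target}: need {expected_bytes / 1e6:.2f} MB, "
            f"short by {missing:.2f} MB"
        )


if __name__ == "__main__":
    # Figures from the warning above: 3077.77 MB expected, 273.49 MB free.
    print(f"{shortfall_mb(3_077_770_000, 273_490_000):.2f} MB short")
```

Calling something like `ensure_room("/workspace/.hf-cache/hub", expected_size)` before fetching a model surfaces the problem as a clear error at startup rather than as a warning followed by a failed download.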