With OpenRouter, I can use the key `models` to send up to 3 models and OpenRouter will pick one base

With OpenRouter, I can use the key

However, I can't see this in the Proxy options. Is that supported yet?

Docs: https://openrouter.ai/docs/requests#request-body

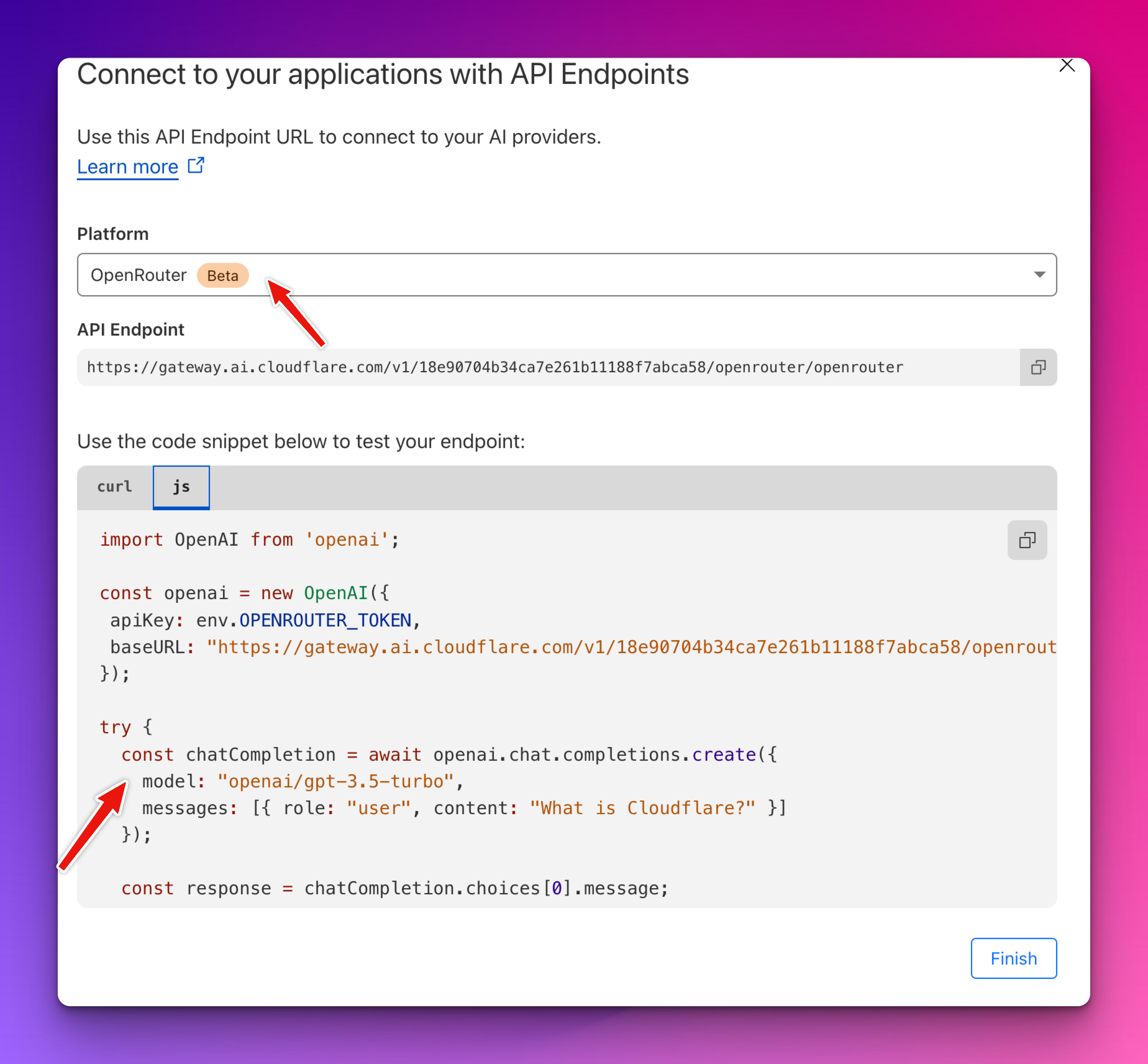

Example:

models to send up to 3 models and OpenRouter will pick one based on availability. So load balancing is already managed by them.However, I can't see this in the Proxy options. Is that supported yet?

Docs: https://openrouter.ai/docs/requests#request-body

Example:
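
For reference, here is a rough Python sketch of the kind of request body I mean. It only builds the JSON payload and does not send anything. The helper name `build_request_body` and the model IDs are placeholders I made up for illustration, and the limit of 3 is just what I described above, not something this code verifies against the API.

```python
import json

# Limit as described in this post; treat it as an assumption.
MAX_MODELS = 3


def build_request_body(models, prompt):
    """Build a chat request body that lists candidate models under `models`.

    Hypothetical helper for illustration only, not part of any library.
    """
    if not models:
        raise ValueError("at least one model is required")
    if len(models) > MAX_MODELS:
        raise ValueError(f"at most {MAX_MODELS} models can be listed")
    return {
        # OpenRouter is expected to pick one of these based on availability.
        "models": list(models),
        "messages": [{"role": "user", "content": prompt}],
    }


body = build_request_body(
    ["openai/gpt-4o", "anthropic/claude-3.5-sonnet"],  # placeholder model IDs
    "Hello!",
)
print(json.dumps(body, indent=2))
```

What I am asking for is a way to pass a list like this through the Proxy, instead of configuring a single model.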