hello everyone I have a doubt related to

One message is not going to hold up the

Hey team! I'm trying to edit the

Is the queues product actually

Will you add these new metrics to the

I’m not sure I understand exactly, but

Message queue, if workers are not used,

Feeling like alone on the problem here

I'm getting `Queue sendBatch failed: Bad Request` despite my requests being correctly shaped.

I am sending thousands of messages in multiple parallel sendBatch requests, however. Is it possible this is the 5000 messages produced per second limit instead?

I really would like to use Cloudflare
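On the `sendBatch` question above: one way to rule out the rate limit is to send batches sequentially and pace them, rather than firing many in parallel. A minimal sketch, not a confirmed fix. `QueueLike`, `chunk`, `sendPaced`, and `MAX_BATCH` are hypothetical names; the cap of 100 messages per call is an assumption to check against the current Queues limits, and the 5000 per second figure is the one quoted in the question.

```typescript
// Hypothetical minimal shape of a queue producer binding; only sendBatch is used.
interface QueueLike<T> {
  sendBatch(messages: { body: T }[]): Promise<void>;
}

// Assumed per-call message cap; verify against the current Queues limits.
const MAX_BATCH = 100;

// Split items into arrays of at most `size` elements, preserving order.
function chunk<T>(items: T[], size: number): T[][] {
  if (size <= 0) throw new RangeError("size must be positive");
  const out: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    out.push(items.slice(i, i + size));
  }
  return out;
}

// Send batches one at a time. Once the running count would exceed `perSecond`,
// wait out the rest of the current one-second window before continuing.
// This is a coarse fixed-window pacer, not an exact rate limiter.
async function sendPaced<T>(
  queue: QueueLike<T>,
  items: T[],
  perSecond = 5000,
): Promise<number> {
  let sentThisWindow = 0;
  let windowStart = Date.now();
  let calls = 0;
  for (const batch of chunk(items, MAX_BATCH)) {
    if (sentThisWindow + batch.length > perSecond) {
      const wait = 1000 - (Date.now() - windowStart);
      if (wait > 0) await new Promise((resolve) => setTimeout(resolve, wait));
      sentThisWindow = 0;
      windowStart = Date.now();
    }
    await queue.sendBatch(batch.map((body) => ({ body })));
    sentThisWindow += batch.length;
    calls++;
  }
  return calls; // number of sendBatch calls made
}
```

In a Worker this would be called as something like `await sendPaced(env.QUEUE, payloads)`. If `Bad Request` still shows up with paced, sequential sends, the rate limit is probably not the cause and the batch contents or sizes are worth a second look.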

How is the consumer location chosen? Is

is it within the road map for http push

On the worker queue producer side, I

await env.QUEUE.send()? From testing, I noticed "warmed up" invocations of the worker wouldn't have this issue, but when it has been idling for a while it would (for a good amount of time) just spin itself down before reaching the await env.QUEUE.send() call.

request IDs affected:

930d4183797951e2

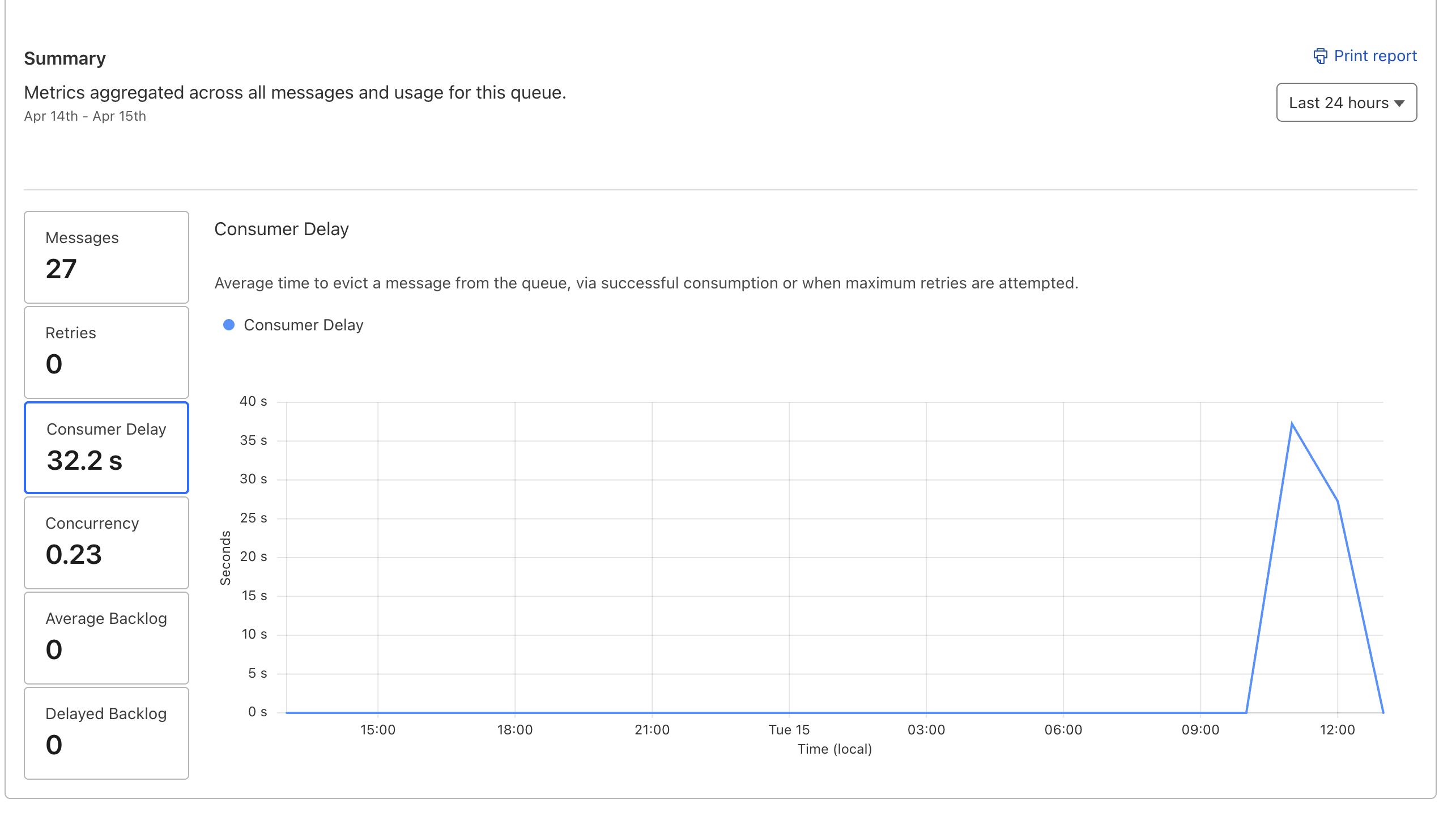

930d2b064f611f41

Same problem here, `Consumer Delay` is about 32 seconds. My configuration:

```
max_batch_size = 1
max_batch_timeout = 0
...
```

Hi, what kind of consumer delays are

Is Queues going to be getting any love

Super cool. A few questions:

Pretty sure something got broken with

internal error; reference = odmj851jl3gua27r036349h7

Quick question if I may, but will queues

Is there any issue with queue? because